Setup Speech Recognizer

Editor

Add

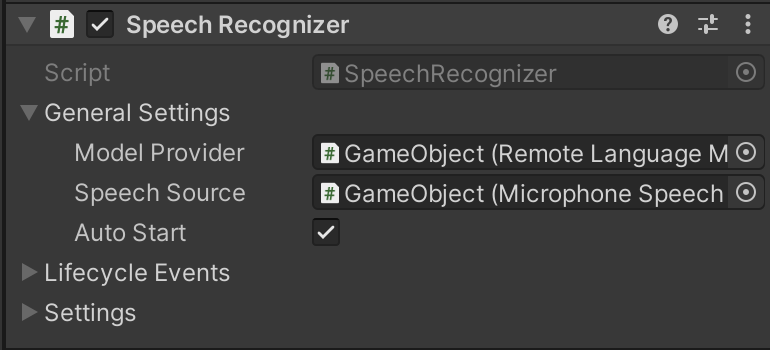

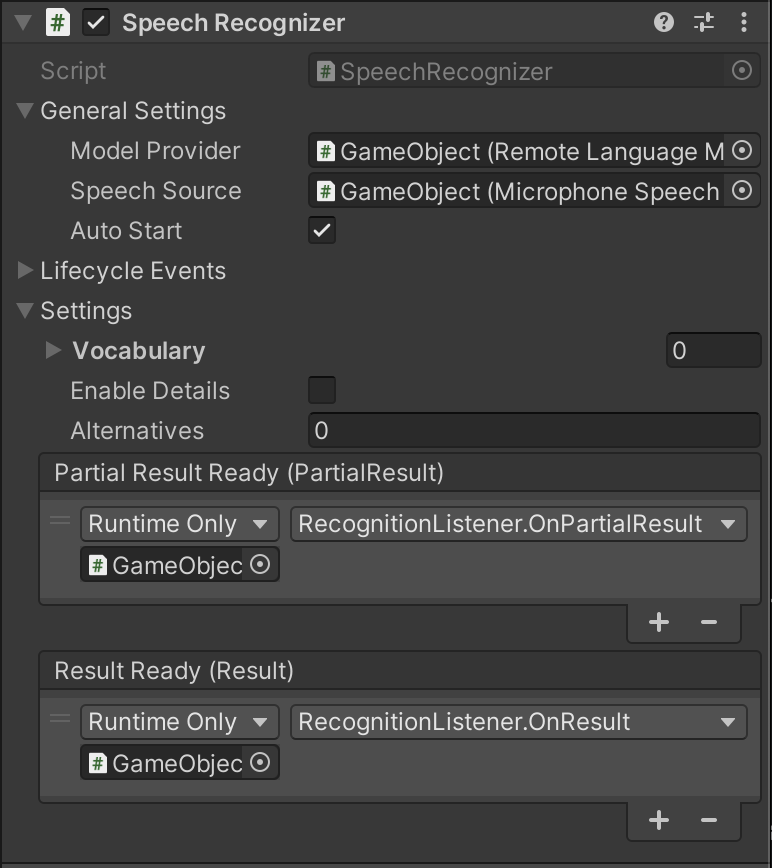

Speech Recognizercomponent to the scene, enable flagAuto Startand connect language model provider and speech source to it.

Now let's get the output:

Create a script called RecognitionListener.cs

using Recognissimo.Components; using UnityEngine; public class RecognitionListener : MonoBehaviour { public void OnPartialResult(PartialResult partialResult) { Debug.Log($"<color=yellow>{partialResult.partial}</color>"); } public void OnResult(Result result) { Debug.Log($"<color=green>{result.text}</color>"); } }Add the

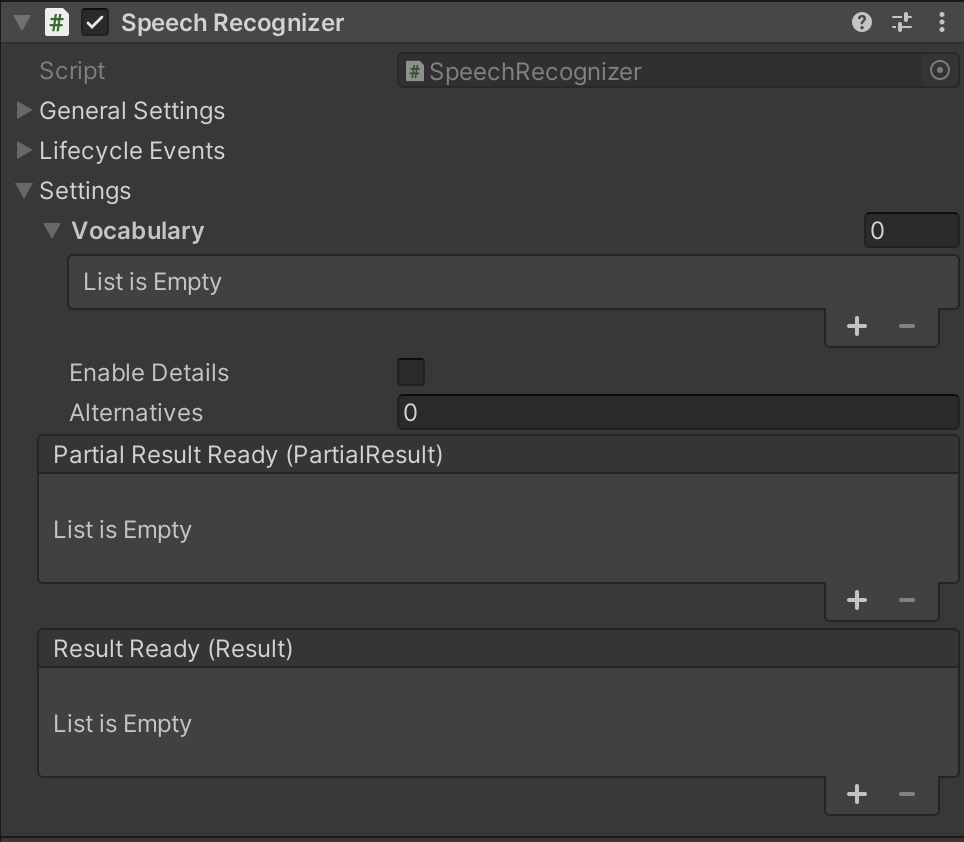

Recognition Listenercomponent and connect it to theSpeech Recognizerevents

Press Play. The recognition output should appear in the Console window.

Scripting

```csharp
using System.Collections.Generic;
using Recognissimo.Components;
using UnityEngine;

public class SpeechRecognizerExample : MonoBehaviour
{
    private void Awake()
    {
        // Create components.
        var speechRecognizer = gameObject.AddComponent<SpeechRecognizer>();
        var languageModelProvider = gameObject.AddComponent<StreamingAssetsLanguageModelProvider>();
        var speechSource = gameObject.AddComponent<MicrophoneSpeechSource>();

        // Setup StreamingAssets language model provider.
        // Set the language used for recognition.
        languageModelProvider.language = SystemLanguage.English;

        // Set paths to language models.
        languageModelProvider.languageModels = new List<StreamingAssetsLanguageModel>
        {
            new() {language = SystemLanguage.English, path = "LanguageModels/en-US"},
            new() {language = SystemLanguage.French, path = "LanguageModels/fr-FR"}
        };

        // Setup microphone speech source. The default settings can be left unchanged, but we will do it as an example.
        speechSource.DeviceName = null;
        speechSource.TimeSensitivity = 0.25f;

        // Bind speech processor dependencies.
        speechRecognizer.LanguageModelProvider = languageModelProvider;
        speechRecognizer.SpeechSource = speechSource;

        // Handle events.
        speechRecognizer.PartialResultReady.AddListener(res => Debug.Log(res.partial));
        speechRecognizer.ResultReady.AddListener(res => Debug.Log(res.text));

        // Start processing.
        speechRecognizer.StartProcessing();
    }
}
```

How to use vocabulary

This feature may not be supported by some language models.

A vocabulary is the list of words available to the speech recognizer. It is used to:

- simplify the recognition process by limiting the list of available words

- make speech recognizer output more predictable

- remove homophones

However, as this definition implies, the speech recognition engine will try to match every spoken word to some word from the vocabulary, even words that are not in it, which is usually undesirable. To avoid this behavior, add the special word "[unk]", which means "unknown word". Every spoken word that cannot be matched against the vocabulary is then reported as "[unk]" in the resulting string.
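Once "[unk]" is part of the vocabulary, the result text may contain that marker wherever an out-of-vocabulary word was spoken. The following is a minimal sketch of stripping those markers before using the text. It assumes the recognized words are separated by spaces and that unknown words appear literally as "[unk]". The class and method names (`UnknownWordFilter`, `RemoveUnknownWords`) are hypothetical helpers for illustration and are not part of the plugin.

```csharp
using System;
using System.Linq;

// Hypothetical helper, not part of the plugin API.
public static class UnknownWordFilter
{
    // Marker assumed to be emitted for out-of-vocabulary words.
    private const string UnknownToken = "[unk]";

    // Returns the recognized text with every "[unk]" token removed.
    public static string RemoveUnknownWords(string recognizedText)
    {
        if (string.IsNullOrWhiteSpace(recognizedText))
        {
            return string.Empty;
        }

        // Split on spaces, drop the unknown-word markers, and rejoin.
        var knownWords = recognizedText
            .Split(new[] { ' ' }, StringSplitOptions.RemoveEmptyEntries)
            .Where(word => word != UnknownToken);

        return string.Join(" ", knownWords);
    }
}
```

For example, `UnknownWordFilter.RemoveUnknownWords("one [unk] two three")` returns `"one two three"`. A result handler could pass `result.text` through this helper before logging or acting on it.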

You can set the vocabulary using:

- the UI (Speech Recognizer component)

- a script

```csharp
speechRecognizer.Vocabulary = new List<string> { "one", "two three", "[unk]" };
```

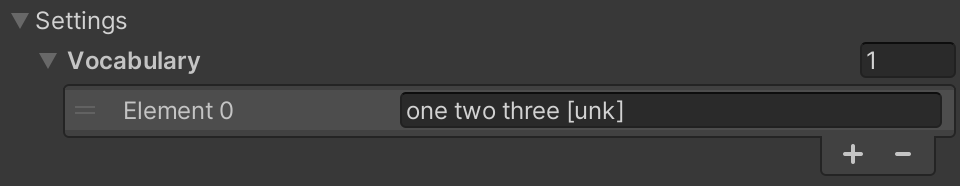

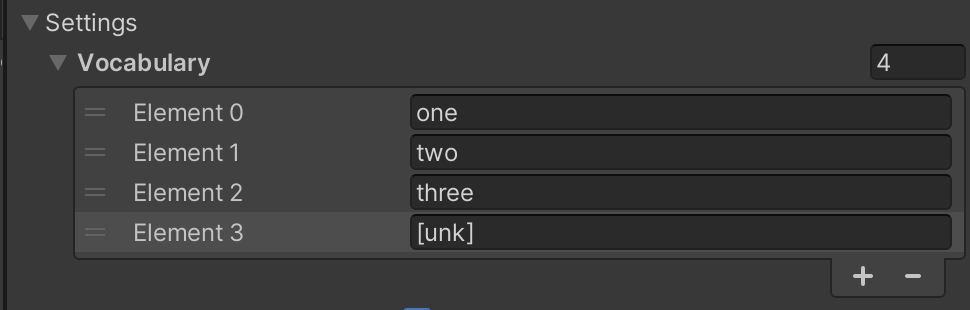

The order of the words doesn't matter. You can also describe the vocabulary with a single string or with multiple strings. For example, the following vocabularies are equivalent: